Welcome to Andrea Sabariego's projects&process website.

A. Sabariego

Performance&Interactive Media Arts

Face tracking&video mapping

An interactive projection project based on facial recognition, using the Kinect infrared sensor and the Max/MSP software.

Preliminary ideas

The idea is to project onto a performer's face while they are moving on stage, so the face needs to be tracked in real time. Ideally there will be two performers: one who moves around the stage, and another who performs standing in front of a camera. The image of the second performer is projected onto the moving performer's face.

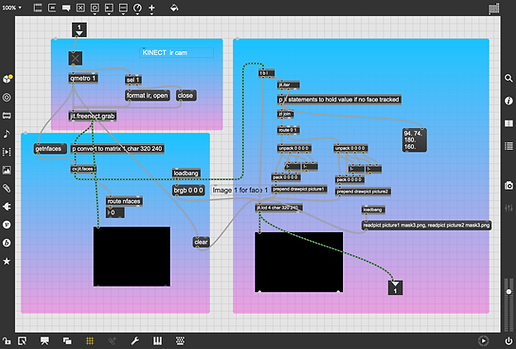

Face tracking in Max/MSP

For face tracking in Max I have used the cv.jit library by Jean-Marc Pelletier, a collection of Max/MSP/Jitter tools for computer vision applications.

https://jmpelletier.com/cvjit/

The cv.jit.faces object makes it really easy to track faces. You can then take the x and y position of the face and use it to move another video layer in jit.world, and output the result via Syphon.
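To make the position step a bit more concrete, here is a minimal Python sketch of the arithmetic involved, written outside of Max. It is only an illustration and not the actual patch: the function name `face_box_to_layer` is hypothetical, and it assumes the tracker reports each face as a bounding box (left, top, right, bottom) in camera pixels and that the video layer is positioned in normalized coordinates from -1 to 1 with y pointing up.

```python
def face_box_to_layer(box, cam_w, cam_h):
    """Convert a face bounding box in camera pixels to a layer position.

    box is assumed to be (left, top, right, bottom) in pixels.
    Returns (x, y, scale): x and y are in the range -1..1 with y pointing up,
    and scale is the face width as a fraction of the camera width.
    """
    left, top, right, bottom = box
    # Center of the face in pixel coordinates.
    cx = (left + right) / 2.0
    cy = (top + bottom) / 2.0
    # Map pixels to -1..1; image rows grow downward, so y is flipped.
    x = 2.0 * cx / cam_w - 1.0
    y = 1.0 - 2.0 * cy / cam_h
    scale = (right - left) / cam_w
    return x, y, scale


# A face centered in a 320x240 camera image maps to the middle of the layer.
print(face_box_to_layer((140, 100, 180, 140), 320, 240))
```

In the patch the same idea would amount to scaling and offsetting the tracked x and y before they reach the layer's position.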

The issue: after testing I realized that I can't track the face and project onto the same surface that I am tracking. To track a face with a regular camera you need light, and if there is a lot of light the projection is washed out.

The next step is to figure out how to do it with an IR camera like the one in the Kinect sensor.

Progress

May 8th update -- I tried the tracking with a powerful projector that can project in lit conditions, but then the camera I was using (a webcam whose parameters I can't change) could not see the face because of the amount of light.

May 11th update -- Ryan helped me with a patch that uses the infrared camera in the Kinect to do the tracking. In the first test, in front of my computer, the tracking worked beautifully. The Kinect IR camera tracks the face and the patch outputs a projection that follows the face at a good frame rate.

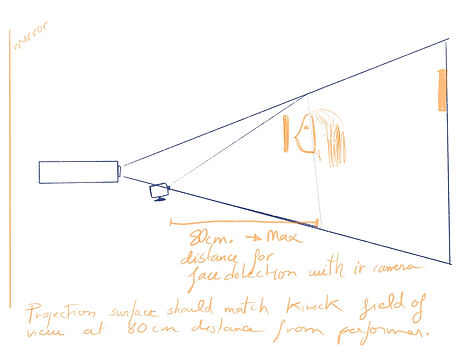

The issues came when I tried to set up a "stage" to work on a performance: at long distances the Kinect IR (infrared) camera does not see the face. The maximum distance the performer can be from the Kinect is around 80 cm (about 30 inches).

Now it is time to calibrate the distances and decide where to place the Kinect and the projector.

In order to match the projection with the face, the projection area should be equal to the field of view of the Kinect. NOTE: I am using a Kinect 1 with a MacBook via the jit.freenect object in Max/MSP.
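A rough way to reason about that matching is with a bit of trigonometry. The Python sketch below estimates how wide the Kinect's view is at the performer's distance, and how far back a projector would need to be to cover the same width. The numbers are assumptions, not measurements from this project: a horizontal field of view of about 57 degrees is the commonly quoted figure for the Kinect 1, the throw ratio of 1.5 is a made-up example, and the calculation ignores the fact that the Kinect and the projector cannot sit in exactly the same spot.

```python
import math

# Commonly quoted horizontal field of view for the Kinect 1 (assumed value).
KINECT_H_FOV_DEG = 57.0


def view_width(distance_m, fov_deg=KINECT_H_FOV_DEG):
    """Width in meters seen by a camera at a given distance."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


def projector_distance(target_width_m, throw_ratio):
    """Distance at which a projector fills target_width_m.

    throw_ratio is distance divided by image width, as listed on
    projector spec sheets.
    """
    return target_width_m * throw_ratio


# Performer at 0.8 m from the Kinect, hypothetical projector throw ratio 1.5.
width = view_width(0.8)
print(round(width, 2), "m wide at the performer")
print(round(projector_distance(width, 1.5), 2), "m projector distance")
```

With these assumed values the projector ends up farther from the performer than the Kinect, so the Kinect has to sit between the projector and the performer without blocking the beam.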

May 15th update -- Unfortunately I have COVID and have to stay in quarantine until after my final presentation. I had planned to have another performer collaborate with me on the calibration and live experimentation, but that is not an option now, so I guess I will have to do it all by myself, once I feel better.

For that purpose I have set up the "studio" in a room with a mirror so I can monitor the projector, the computer, the Kinect and myself... the good news is I am finally using the ironing table!

May 18th update -- Below is some video of the tests I have done. Matching the projection to the performer is a pain in ***. I have tried multiple positions for the camera and the projector but I haven't found the right formula. Maybe hanging the projector would give me a better angle and let me keep the Kinect close enough without interfering with the projection, but I don't have a proper place to hang it right now. The up and down tracking kind of works if I adjust the Y position in the patch, but the X position is always off.

Then I thought I could compensate by using a second mask for the tracking and try to play with that.
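One thing that might help with the X offset is a simple linear calibration: stand at a few known spots, note the X value the tracker reports and the X value that actually lands the projection on the face, and fit a gain and an offset between the two. The Python sketch below shows that fit using ordinary least squares. It is an untested idea rather than something from the patch, the function names and sample values are hypothetical, and it assumes the performer stays at roughly the same distance, since the offset between the Kinect and the projector changes with depth.

```python
def fit_gain_offset(tracked, aligned):
    """Least-squares fit of aligned = gain * tracked + offset."""
    n = len(tracked)
    if n < 2 or n != len(aligned):
        raise ValueError("need at least two matching calibration pairs")
    mean_t = sum(tracked) / n
    mean_a = sum(aligned) / n
    var_t = sum((t - mean_t) ** 2 for t in tracked)
    if var_t == 0:
        raise ValueError("calibration positions must differ")
    cov = sum((t - mean_t) * (a - mean_a) for t, a in zip(tracked, aligned))
    gain = cov / var_t
    offset = mean_a - gain * mean_t
    return gain, offset


def correct_x(x, gain, offset):
    """Apply the fitted correction to a tracked X position."""
    return gain * x + offset


# Hypothetical calibration: tracked X values and the X values that lined up.
gain, offset = fit_gain_offset([-0.5, 0.0, 0.5], [-0.4, 0.2, 0.8])
print(gain, offset, correct_x(0.25, gain, offset))
```

The same gain and offset could then be applied to the X position inside the patch, in the same place where the Y position is already being adjusted.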

Then I went crazy.

Do I need a plan B?